AI in Higher Education Webinar Series

3: Society

1 May 2025

Here are the slides from James Ransom’s presentation at the event on AI in society, organised by the National Centre for Entrepreneurship in Education (NCEE). Links are included for key texts. Contact details are at the end.

Hello everyone and thank you for joining us.

In the previous two sessions I talked quite a bit and we only had a short time at the end for discussion. The good news is that today my presentation is shorter (around 25 mins), there are some polls, and then we’ll open it up for you to share your views.

So here’s how today’s session will be structured. First, we are going to talk about a computer game. Second, we’re going to very quickly recap where we got to in the previous two sessions. Third, we are going to visit Sweden. And then finally we’re going to dive into five scenarios and I’ll get your feedback on these.

Sim University

A few years ago a Dutch think tank asked me to write an article about universities and cities. I decided to write a half-joking description of a game called Sim University for them. Some of you may have heard of or even played the SimCity games which were especially popular in the 1990s. You had to build and grow and manage a city, balancing all kinds of decisions, dealing with crises and helping it to grow.

In my idea for Sim University you would become the vice-chancellor or president and have to manage your own university. You could choose your goals: top the league tables and attract international students, or become a pillar of the local community, helping the disadvantaged and working with small businesses.

You would have to deal with a Board of Governors harassing you about budgets and a Ministry of Education on your back. Local newspapers would be watching what you do, and students would be looking to have fun and then get jobs after graduating.

You could build shiny buildings, or open overseas campuses and incubation centres. Maybe you have a star academic who’s causing trouble. Do you fire them or do you promote them? You would have to guide your university through lots of crises that pop up.

In writing this article I did some research into the original SimCity and found a couple of quite interesting things.

First, it was pretty realistic, and urban planners used it to test ideas. You could explore the relationship between things like crime rates and expanding rail networks. Apparently the traffic models were more advanced than those most traffic engineers used in real life.

Second, SimCity has been used as a test of competence for leaders.

In 1990, there was a Democratic primary election in Rhode Island, in the US, and the local newspaper invited five candidates for mayor to play a game of SimCity against each other. One didn’t take it very seriously, and built loads of police stations, swapped the electric power plant for a nuclear one and bulldozed the churches. She went on to lose the election.

In 2002, the candidates for mayor in Warsaw, Poland, played SimCity. The man who won that competition ended up winning the election and eventually became the president of Poland.

Now, I’m not necessarily suggesting that we should develop Sim University and make it part of the selection process for university vice-chancellors.

But I do think we can learn from testing ideas in a playful setting and seeing where we end up. In SimCity you could run different scenarios, such as an alien landing in the city or developing a city in an earthquake zone, and then deal with all the issues and complexities that followed. We will return to Sim University in the final part of this presentation.

Dr James Ransom

About me

To introduce myself, for those of you who don’t know me: I am James Ransom, Head of Research at NCEE. I’m also a Senior Visiting Fellow at UCL Institute of Education. Although I’ve been doing work on AI, my background is primarily in the role of universities in tackling societal challenges. But, in my opinion, these issues are actually quite closely linked.

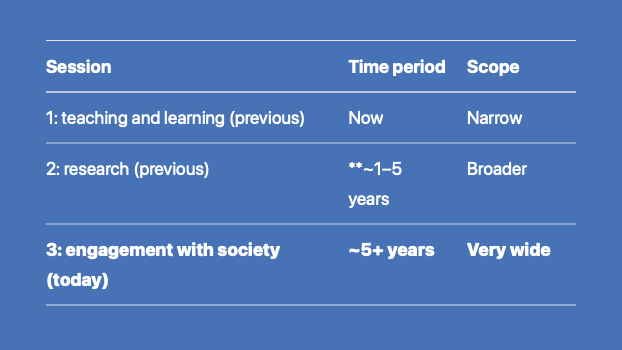

| Session | Time period | Scope |
|---|---|---|
| 1: teaching and learning (previous) | Now | Narrow |
| 2: research (previous) | ~1–5 years | Broader |
| 3: engagement with society (today) | ~5+ years | Very wide |

Now for a brief recap of our previous sessions. You will have seen this before: we’re now at the final session, looking furthest ahead and most broadly.

The first session focused on AI and teaching and learning in universities. We had some case studies of how universities are integrating AI, some successfully, others less so. This session was focused on the state of play right now. The conclusion was that some universities risk getting caught up in paranoia about student cheating, or getting excited about using AI to cut costs, but instead they need to take a step back and look at AI strategically.

Some of this was based on a paper that I wrote with a colleague which has since been published and is available on NCEE’s website together with all of the slides.

One of the takeaways from session 1 was that even if AI doesn’t evolve further, there are still plenty of opportunities for universities to seize and challenges to overcome.

The second session was focused on research. Here we got a bit more speculative and started to look a few years ahead. Whilst I didn’t take a definitive position on whether we’re likely to see a huge explosion in AI capability, we did look through what this might mean for research if this did happen.

My recommendation for universities was to think about what it might mean if there’s any chance at all of a jump in capabilities coming. I’m not necessarily talking about artificial general intelligence, just an evolution in capability.

Now this does mean that there’s a wide range of possibilities and there’s a great degree of uncertainty.

Today’s session will focus less on what is happening now and will instead take a more operational view. We’ll take 5 scenarios and think about potential roadmaps going forwards.

Key takeaway

Universities will fail in their civic duty if they do not engage with possible future trajectories of AI

Each of the previous sessions has had a key takeaway. This is today’s.

Now, this might mean that your university takes a serious look at what may happen in the future and decides that the risks, opportunities and challenges are minimal. You reflect on this and then develop your strategy accordingly. This is fine.

It might be that your university decides there’s a small chance of rapid change and also invests and plans accordingly. What I would deem a failure is a university that does not consider the possibility of great impact on society, on their neighbours, from AI and is instead narrowly focused on, for example, the implications of AI for student essay writing.

(Universities have always had an important societal role as a site of debate, of discussion, of shaping the future as well as reflecting upon it, as a critical friend to civil society, to government, to other bodies. This needs to continue.)

Let’s now visit Sweden.

Now, I don’t mean to pick on Sweden here. I studied in Sweden for two years and I have very fond memories. Sweden makes a good case study for two reasons. First, it has a strong and relatively well-supported higher education sector. Second, there’s been some interesting recent research.

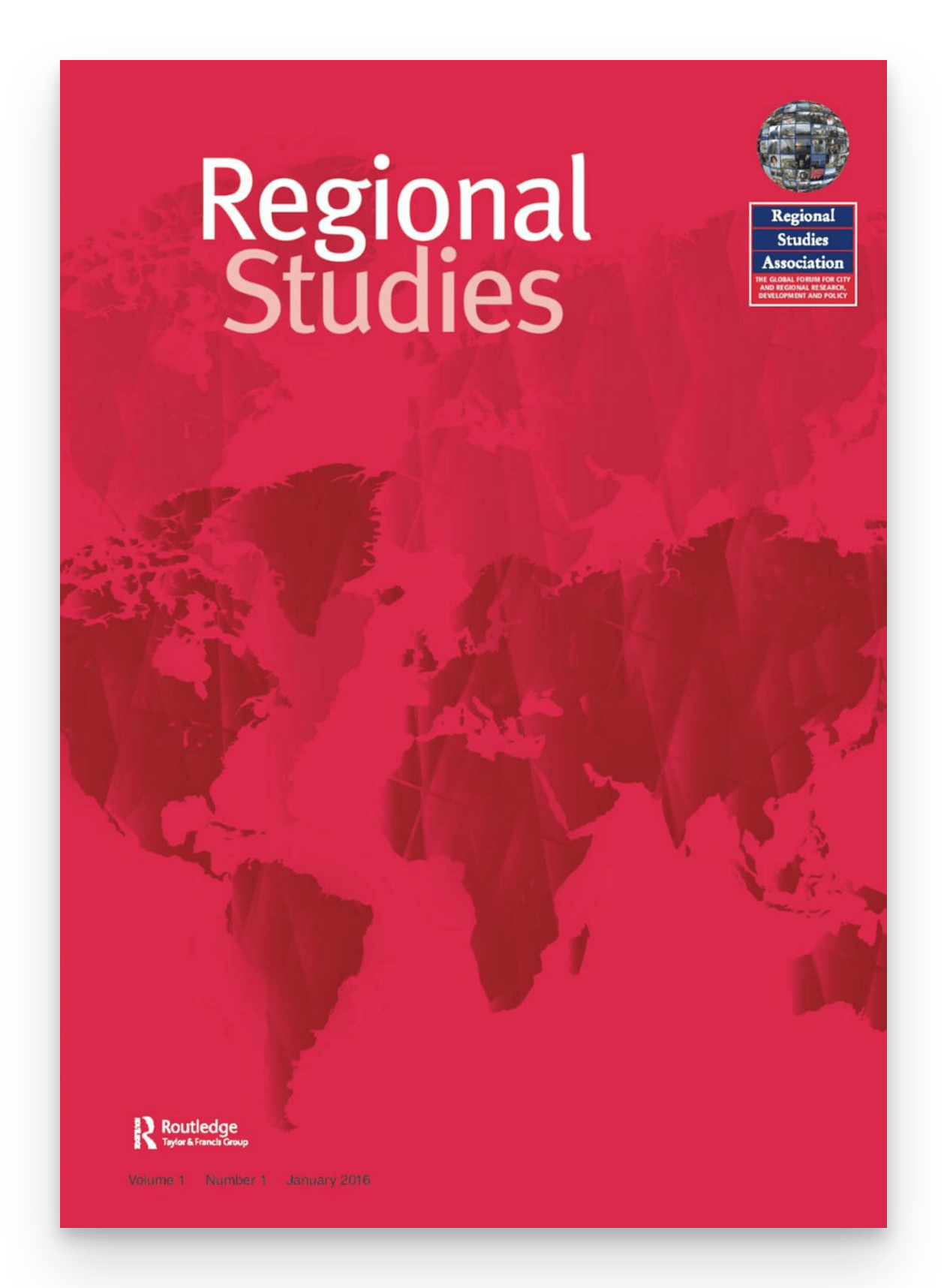

“The local economic impact of the Swedish higher education system” [1]

This article was published in the prestigious Regional Studies journal earlier this year by researchers at LSE and the University of Southampton.

Around 30 public higher education institutions are analysed. These are a mixture of research universities and those with a vocational focus. Sweden had a deliberate policy of spreading these across the regions, so that they would sit at the heart of local innovation systems.

One of the arguments that universities and their supporters have shouted loud about is how they are central to economic development, that they have a huge role in helping society, in terms of employment and business support, and making towns and cities nicer places to live.

But this article persuasively makes the case that universities are not always fulfilling this function. There are three main conclusions.

[1] Rodríguez-Pose, A., & Wang, H. (2025). The local economic impact of the Swedish higher education system. Regional Studies. https://doi.org/10.1080/00343404.2025.2462712

1. Greater research intensity correlates with a negative effect on local income

First, greater research intensity correlates with a negative effect on local income.

2. Higher education institutions do not significantly contribute to local human capital

Second, higher education institutions do not significantly contribute to local human capital.

3. Limited collaboration with local business and a lack of alignment with industry needs

And third, the translation of innovation and knowledge faces many difficulties, including limited collaboration with local business and a lack of alignment with industry needs.

I’m discussing this for two main reasons. One is that we should never take for granted that universities are automatically important development actors in their community.

Some spend small fortunes on economic impact reports to try to demonstrate this, but these studies don’t tell the whole story and, I believe, are mostly meaningless for local communities.

This is coupled with endless self-promotion, which is a byproduct of the global higher education system, league tables and so on. And it’s greatly complicated by the financial crisis that universities are currently facing.

The second is that there is an opportunity in here and one that is closely related to our discussion of AI. Universities should be playing a leading role in discussions about the impact of AI on society. They should be shaping policies and having wide-ranging discussions about how this may affect the future.

What if we’re in the middle of a small window right now to shape how the next 10 years or so will pan out?

And I think this is a local, regional effort. Universities are not alone in trying to understand what AI may mean. They need to be listening to and working with local businesses, local government, healthcare providers, on what this all means.

As a result, there’s an opportunity for this third pillar of societal engagement to be really important.

We’re now going to wrap everything up by exploring five scenarios. As I go through them, think about what resonates with you – what sounds plausible? Make note of this because we’ll come back to your thoughts at the end.

The 5 scenarios we’ll go through are drawn from an annex in this publication by the UK’s Government Office for Science, published in late 2023.

A few things to note before we dive in. First, the authors are UK-based, and numerous UK academics, and even a UK vice-chancellor, were part of the group that informed it. But I think it’s pretty applicable anywhere.

Second, there’s a focus on risk rather than opportunity so bear that in mind, plus the focus is on ‘Frontier AI’ which they define as the most capable models available.

Third, these are scenarios that take place in the year 2030, so 5 years from now or 7 years from when this was published.

Fourth, these are not exhaustive: they’re just a flavour of some of the possibilities and they are not predictions. And they’re not on a sliding scale, so they don’t go from ‘no impact’ to ‘lots of impact’ for example; they’re all quite different.

Fifth, I’ve extrapolated the impacts on universities from the scenarios, so you won’t find this in the original document. Each scenario would demand a different university preparation and response, and I’ve done some initial thinking on this.

Scenario 1: Unpredictable Advanced AI

In the late 2020s, new open-source models emerge, capable of completing a wide range of tasks with startling autonomy and agency.

These agents can complete complex tasks that require planning and reasoning, and can interact with one another flexibly. Once a task is set, they devise a strategy with sub-goals, learning new skills or how to use other software.

AI-based cyber-attacks on infrastructure and public services became significantly more frequent and severe.

When powerful new AI is released, skills inequalities widen. Start-ups with higher skilled workers and a higher risk appetite quickly surge ahead and disrupt existing markets.

One cause for optimism is a series of rapid science discoveries enabled by academics adopting new AI tools.

What does this mean for universities?

Research breakthroughs

Resilient infrastructure

Skills divide

- Open source means universities and academics can use these models directly and freely, and there are research breakthroughs.

- Cyber attacks on infrastructure also affect universities, requiring investment in defence and resilience, and supporting partners.

- There’s a widening and rapidly changing skills divide. How do universities respond?

Scenario 2: AI disrupts the workforce

Concerns over the exhaustion of high-quality data led labs to acquire or synthesise new datasets tailored for specific tasks, leading to progress in narrow capabilities.

By 2030, the most extreme impacts are confined to a subset of sectors, but this still triggers a public backlash, starting with those whose work is disrupted, and spilling over into a fierce public debate about the future of education and work.

By 2030, there is significant deployment of AI across the economy, driven by improvements in capability and the opportunity this offers to reduce costs.

This is most highly concentrated in certain sectors, e.g. IT, accounting, transportation, and in the biggest companies who have the resources to deploy new systems.

This transition favours workers with the skills to oversee and fine-tune models (a new class of ‘AI managers’), resulting in greater inequality. Public concern focusses on the economic and societal impacts, mainly rising unemployment and poverty.

What does this mean for universities?

Massive reskilling

Research moves

Public debate

- Significant job losses in particular sectors mean massive government and public demand for reskilling and adult education.

- AI systems are narrow and owned by a few large companies. This has implications for university research, which – in these domains – is increasingly supported by big labs.

- There is fierce public debate, including around things like universal basic income (UBI). What role do universities play as a space for this debate, for critique, for policy support, for evidence and analysis?

Scenario 3: AI ‘wild west’

Throughout the 2020s there have been moderate improvements in AI capability, particularly generative AI, such as creation of long-form, high-quality video content. It is now easy for users to create content that is almost impossible to distinguish from human-generated output.

There is a diverse AI market in 2030 - big tech labs compete alongside start-ups and open-source developers. Some of the most advanced models are released by authoritarian states.

A big increase in misuse of AI causes societal unrest as many members of the public fall victim to organised crime. Businesses also have trade secrets stolen on a large scale, causing economic damage.

There are job losses from automation in areas like computer programming, but this is offset to some extent by the creation of diverse new digital sectors and platforms based on AI systems, and the productivity benefits for those who augment their skills with AI.

However, concerns of an unemployment crisis are starting to grow due to the ongoing improvements in AI capability.

What does this mean for universities?

Business support

Changing markets

Eroded trust

- Businesses need support and guidance. Universities are also targets.

- Again, support and reskilling are needed for those who have lost their jobs, but there are also new sectors to work with and support.

- The erosion of trust has serious implications: from verifying student credentials to authenticating research. Can universities stand out as trusted actors?

Scenario 4: Advanced AI on a knife edge

A big lab launches a service badged as AGI and, despite scepticism, evidence seems to support the claim. Many beneficial applications emerge for businesses and people, which starts to boost economic growth and prosperity.

This system is seemingly able to complete almost any cognitive task without explicit training. For example, it possesses an impressively accurate real-world model and has even been connected to robotic systems to carry out physical tasks.

The increase in AI capability during the 2020s resulted in widespread adoption by businesses. Although this has caused disruption to labour markets, some employers are using tools to augment rather than displace workers and are using gains to implement shorter working weeks.

Most people are happy to integrate these systems into their daily lives in the form of advanced personal assistants. And given AI is also playing a role in solving big health challenges, many feel positive about its impacts on society.

However, with the recent development of an ‘AGI’, the public is becoming more aware of bigger disruptions on the horizon, including potential existential risks.

What does this mean for universities?

New opportunities

Prosperous complacency

Research shifts

- Shorter working weeks create new opportunities for universities in lifelong learning, personal development, and leisure education as people have more free time.

- Universities have benefitted from the rising tide of prosperity, but have they become complacent? Are they helping to shape the future? Are their business models able to withstand AGI? Have some specialised in, for example, ethical reasoning, creativity, human oversight of AI systems?

- Research excellence shifts dramatically toward large tech companies with AGI capabilities, with universities becoming secondary partners or applied research centres – to a greater extent than in scenario 2.

Scenario 5: AI disappoints

AI capabilities have improved somewhat, but only just moving beyond advanced generative AI and incremental roll out of narrow tools to solve specific problems.

Many businesses have also struggled with barriers to effective AI use. Investors are disappointed and looking for the next big development.

There is mixed uptake, with some benefiting, and others falling victim to malicious use, but most feel indifferent towards AI.

Those few with the right skills enjoy the benefits of AI but many struggle to learn how to effectively use the temperamental AI tools on offer.

Some companies marketing AI-based products avoid disclosing their use of AI, in case it impacts sales.

What does this mean for universities?

Productivity stagnation

Vindicated sector

Emerging technologies

- Productivity continues to stagnate. The same questions continue to be asked of universities about employable graduates, knowledge exchange and so on.

- The sector’s cautious approach to AI adoption appears vindicated. Business models continue mostly unchanged. Universities that didn’t cut humanities, arts and social sciences courses see a resurgence.

- Research shifts toward alternative emerging technologies like quantum computing and fusion energy, benefiting institutions with strengths in these areas.

Now it’s poll time. I have three questions for you to answer. I asked AI to turn these into movie slogans, so you’re able to remember them easily – I’ll paste these into the chat.

Which scenario do you think is most likely for 2030?

1: Unpredictable Advanced AI

2: AI disrupts the workforce

3: AI ‘wild west’

4: Advanced AI on a knife edge

5: AI disappoints

Which scenario do you personally hope for?

1: Unpredictable Advanced AI

2: AI disrupts the workforce

3: AI ‘wild west’

4: Advanced AI on a knife edge

5: AI disappoints

Which scenario should your university prepare for?

1: Unpredictable Advanced AI

2: AI disrupts the workforce

3: AI ‘wild west’

4: Advanced AI on a knife edge

5: AI disappoints

(This may or may not be the scenario you voted most likely!)

We now return to my somewhat tenuous link to Sim University. I think that we should be running SimCity-style scenarios for our universities. Most universities already do modelling or scenario work, especially around finances and student recruitment.

Let’s swap the SimCity scenario of aliens landing in your city for, say, AI replacing 70% of the jobs in your local labour market. Scenarios should force you to engage with the unexpected in a safe environment, with some randomness, with incomplete information.

They force you to make tough decisions, to invest. And to think of your environment as a system that you need to strengthen and work with, considering second- and third-order effects and how these in turn affect you.

Society, of course, can’t be reduced to a game, however complex it may be. But this can be a useful exercise. You might find a 10% likelihood of an extreme transformation, but this would change 90% of what you do. What do you do next?
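The arithmetic behind that last question can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Scenario` class, the `exposure` measure (likelihood multiplied by the share of activity changed) and the second row of numbers are all hypothetical, and only the 10% and 90% figures come from the example above.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    name: str
    likelihood: float     # assumed probability of the scenario (0 to 1)
    share_changed: float  # assumed share of university activity it changes (0 to 1)

    def exposure(self) -> float:
        """Likelihood-weighted share of activity affected (a hypothetical measure)."""
        return self.likelihood * self.share_changed


# Illustrative numbers only. The first row uses the 10% / 90% example from the
# text; the second is a made-up contrast: likely, but shallow, change.
scenarios = [
    Scenario("Extreme transformation", likelihood=0.10, share_changed=0.90),
    Scenario("Incremental change", likelihood=0.60, share_changed=0.10),
]

# Rank scenarios by exposure, largest first.
ranked = sorted(scenarios, key=lambda s: s.exposure(), reverse=True)
for s in ranked:
    print(f"{s.name}: exposure {s.exposure():.2f}")
```

On these made-up numbers the unlikely scenario still ranks above the likely one, which is why a low-probability transformation can deserve serious planning time.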

Key takeaway

Universities will fail in their civic duty if they do not engage with possible future trajectories of AI

I will finish where we started: with the key takeaway. And just a reminder, because I can see how this could be misunderstood: what I’m saying is that your university needs to take this seriously, even if it ultimately decides that there won’t be significant change. Or it might decide that some changes, some preparation, are needed.

Discussion

[email protected]

linkedin.com/in/ransomjames

Thanks for listening (or reading this!); please keep in contact.

Cover image credit: Zoya Yasmine / Better Images of AI / The Two Cultures / CC-BY 4.0